HNPU: Adaptive Fixed-point DNN Training Processor

Overview

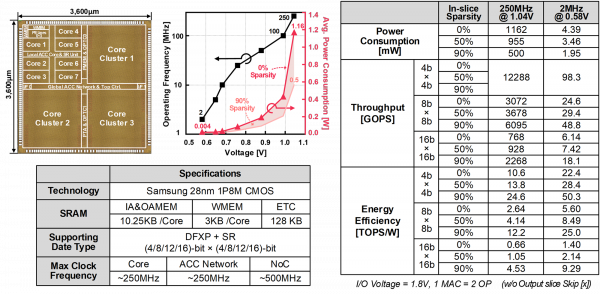

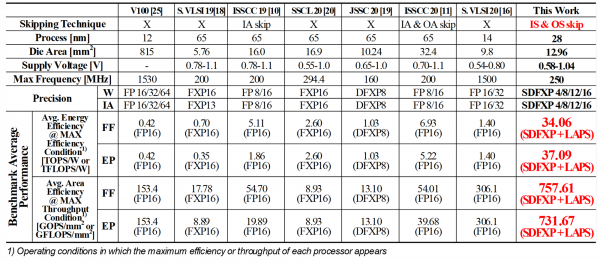

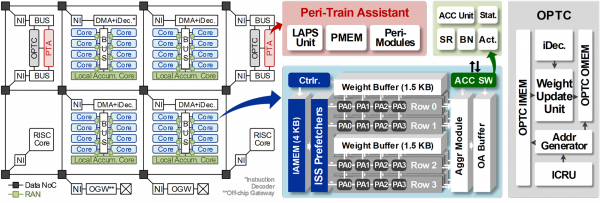

This paper presents HNPU, an energy-efficient DNN training processor built on algorithm-hardware co-design. The HNPU supports a stochastic dynamic fixed-point representation and a layer-wise adaptive precision searching unit for low-bit-precision training. It additionally exploits slice-level reconfigurability and sparsity to maximize efficiency in both DNN inference and training. An adaptive-bandwidth reconfigurable accumulation network enables reconfigurable DNN allocation and maintains high core utilization across varying bit-precision conditions. Fabricated in a 28 nm process, the HNPU achieves at least 5.9x higher energy efficiency and 2.5x higher area efficiency in actual DNN training than previous state-of-the-art on-chip learning processors.
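To make the number format more concrete, the following is a minimal software sketch of stochastic rounding combined with a dynamic fixed-point scale, not the HNPU's actual datapath. It assumes one shared scale per tensor, chosen from the largest magnitude, and signed two's-complement integers; the function names `stochastic_round`, `quantize_dynamic_fixed_point`, and `dequantize` are hypothetical and do not come from the paper.

```python
import math
import random


def stochastic_round(x, rng):
    """Round x to a neighbouring integer.

    Rounds up with probability equal to the fractional part, so the
    expected value of the result equals x.
    """
    floor = math.floor(x)
    return floor + (1 if rng.random() < (x - floor) else 0)


def quantize_dynamic_fixed_point(values, bits, rng):
    """Quantize floats to signed `bits`-bit integers with one shared scale.

    Returns (integers, frac_bits), where value ~= integer * 2**-frac_bits.
    The per-tensor scale is an assumption made for this sketch.
    """
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return [0] * len(values), 0
    qmin, qmax = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    # frexp gives max_abs = m * 2**e with 0.5 <= m < 1, so max_abs < 2**e.
    _, exponent = math.frexp(max_abs)
    frac_bits = (bits - 1) - exponent
    quantized = []
    for v in values:
        q = stochastic_round(math.ldexp(v, frac_bits), rng)
        quantized.append(min(qmax, max(qmin, q)))  # clamp after rounding up
    return quantized, frac_bits


def dequantize(quantized, frac_bits):
    """Map the integers back to floats using the shared scale."""
    return [math.ldexp(q, -frac_bits) for q in quantized]


rng = random.Random(0)
q, frac_bits = quantize_dynamic_fixed_point([0.3, -1.2, 0.05, 0.9], bits=8, rng=rng)
print(q, frac_bits, dequantize(q, frac_bits))
```

In this sketch, layer-wise precision scaling would amount to choosing a different `bits` value for each layer; how the HNPU searches for that value is not described here.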

Features

- Stochastic dynamic fixed-point number representation

- Layer-wise adaptive precision scaling

- Input-slice skipping

- Adaptive-bandwidth reconfigurable accumulation network
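The slice-skipping idea in the list above can be illustrated in software as follows. This is a sketch under stated assumptions rather than a description of the HNPU hardware: it assumes unsigned 8-bit inputs split into 4-bit slices (the slice width `SLICE_BITS` and the function names are hypothetical), and it skips the multiply-accumulate for any slice that is zero.

```python
SLICE_BITS = 4  # assumed slice width, not taken from the source


def split_into_slices(value, total_bits, slice_bits=SLICE_BITS):
    """Split a non-negative integer into slices, least significant first."""
    if value < 0 or value >= (1 << total_bits):
        raise ValueError("value does not fit in total_bits")
    mask = (1 << slice_bits) - 1
    return [(value >> shift) & mask for shift in range(0, total_bits, slice_bits)]


def dot_with_slice_skipping(inputs, weights, total_bits=8, slice_bits=SLICE_BITS):
    """Compute a dot product slice by slice, skipping zero input slices.

    Returns (result, slices_computed, slices_skipped).
    """
    acc = 0
    computed = 0
    skipped = 0
    for x, w in zip(inputs, weights):
        for index, s in enumerate(split_into_slices(x, total_bits, slice_bits)):
            if s == 0:
                skipped += 1  # a zero slice contributes nothing to the sum
                continue
            # Shift the partial product back to the slice's bit position.
            acc += (s * w) << (index * slice_bits)
            computed += 1
    return acc, computed, skipped


result, computed, skipped = dot_with_slice_skipping([0, 3, 16, 200], [5, -2, 7, 1])
print(result, computed, skipped)
```

Skipping at slice granularity lets small nonzero values save work too, since their upper slices are zero even when the full value is not.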

Related Papers

- COOL Chips 2021